Yesterday I took part in Hash Code 2017, a team coding competition. Teams were 2 to 4 people and, rather conveniently, it was possible to participate online (although it would probably have been a bit more fun to attend a nearby hub). I asked a few guys at work, but they were all busy for different reasons. In the end, I teamed up with an ex-colleague who does not even live in Berlin and decided that remote collaboration over Google Drive and Skype would have to do. However, a couple of minutes into the challenge, he got a work-related call and had to drop out. I don't think he even finished reading the problem statement!

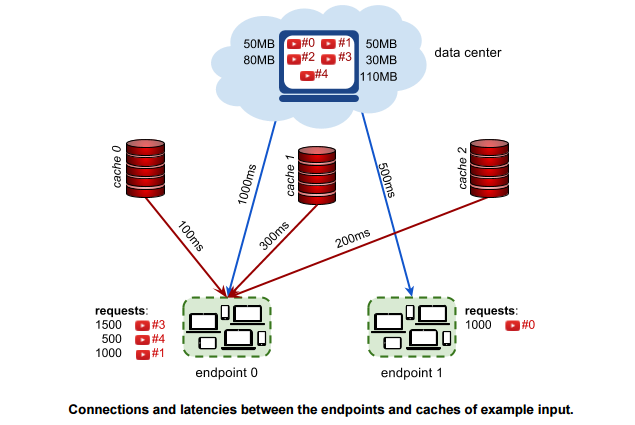

I decided to press on alone and spent the next four hours trying not to end up with a blank score. The challenge was about optimizing cache servers for YouTube. Cache servers are limited in size but offer lower latency than faraway data servers. The question is which videos to store in each cache server based on expected usage.

I started by writing the code to read the data in and, after that, I went to the chapter on optimization in my dearest Python book. I noticed today that I should have searched online for this (rather predictably, it was available on MIT OCW), but I simply copied the code from the book and modified it to fit my input. In my case, for each cache server there is a loop going through all the requests and endpoints. With the knapsack problem model in mind, the value is the number of times a video is requested times the latency saving, the weight is the size of the video and the constraint is the server size.

Unfortunately, I did not make any early submissions to check which greedy criterion (value, weight or density) would give me a better score. I went for density, but I just checked now and I should have gone for value (that alone would have given me an extra hundred positions on the scoreboard, i.e. out of the very deep bottom up into the deep bottom). Whatever, by the time I got there I was at least happy to have something to submit.

My computer slowly cranked through the next problem set, but I had not had time to do any data exploration, so I pretty much blindly fed it into the algorithm. With a bigger team, I think we could have explored other options. Maybe next time. The computer somehow managed to finish the second problem set (out of four) at 22:29, but I could not make the submission as the deadline was at 22:30! A final rush but, unlike in the films, I missed it by a couple of seconds.
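For readers who have not seen the greedy knapsack approach, here is a minimal sketch of the idea described above. It is not the code from the book or from my submission: the `Video` class, the function names and the numbers are all made up for illustration. Each video has a value (requests times latency saving) and a weight (its size), and the cache is filled in descending order of whichever criterion is chosen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Video:
    video_id: int
    size_mb: int  # knapsack "weight"
    value: int    # requests * latency saving (hypothetical numbers below)


def by_value(video):
    return video.value


def by_weight(video):
    # Smaller videos first, so invert the size.
    return 1 / video.size_mb


def by_density(video):
    return video.value / video.size_mb


def greedy_fill(videos, capacity_mb, key):
    """Take videos in descending order of key(video) as long as they fit."""
    chosen, used = [], 0
    for video in sorted(videos, key=key, reverse=True):
        if used + video.size_mb <= capacity_mb:
            chosen.append(video)
            used += video.size_mb
    return chosen


def total_value(chosen):
    return sum(video.value for video in chosen)


# Made-up data: four videos and one cache server of 100 MB.
videos = [
    Video(0, 50, 1000),
    Video(1, 30, 900),
    Video(2, 80, 1500),
    Video(3, 20, 100),
]

for name, key in [("value", by_value), ("weight", by_weight), ("density", by_density)]:
    chosen = greedy_fill(videos, 100, key)
    print(name, [v.video_id for v in chosen], total_value(chosen))
```

On this toy data density happens to beat value, which is the opposite of what I saw on the real data set. None of the three criteria is guaranteed to be optimal, so the only way to know is to try all of them, which is exactly what I did not do in time.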

The chart below shows endpoints to the left and cache servers and the data center to the right. As can be seen, the algorithm I used did not capture many of the requested videos well, and three cache servers did not even get populated (they actually did, but their list of videos duplicated that of other servers linked to the same endpoints, as this kind of finesse was not considered in my code). All in all, with a bit more time, there are a couple of tweaks I could have made for a better solution, but the challenge was never meant for a single person!
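One possible tweak for the duplication problem, which I did not implement during the contest, would be to score each video by its marginal value: only the latency saved on top of what an endpoint already gets from caches filled earlier. The sketch below assumes hypothetical data structures (`requests`, `cache_latency`, `best_latency`) that are not taken from my actual code.

```python
def marginal_value(requests, cache_latency, best_latency, video_id):
    """Extra latency saved by adding video_id to one candidate cache.

    requests:      list of (endpoint_id, video_id, request_count)
    cache_latency: {endpoint_id: latency from that endpoint to the candidate cache}
    best_latency:  {(endpoint_id, video_id): best latency achieved so far}
    """
    total = 0
    for endpoint, vid, count in requests:
        if vid != video_id or endpoint not in cache_latency:
            continue  # different video, or endpoint not linked to this cache
        current = best_latency[(endpoint, vid)]
        # No gain if an earlier cache already serves this video faster.
        total += count * max(0, current - cache_latency[endpoint])
    return total


# Made-up example: one endpoint, one video, two caches, data center at 100 ms.
requests = [(0, 7, 100)]
cache_a = {0: 10}
cache_b = {0: 20}
best_latency = {(0, 7): 100}

gain_a = marginal_value(requests, cache_a, best_latency, 7)
best_latency[(0, 7)] = cache_a[0]  # video 7 is now stored in cache A
gain_b = marginal_value(requests, cache_b, best_latency, 7)
print(gain_a, gain_b)
```

With this scoring, once video 7 sits in cache A it is worth nothing to the slower cache B, so B's space would go to other videos instead of a duplicate.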

So, summary line, really good fun and a lot left to learn!
